Safe reinforcement learning

Advisor: Anna Rafferty

Times: Winter 4a

Background

Imagine you want to make a robot that can place dishes in a dishwasher. It might learn how to do this by trying lots of different ways of manipulating the dishes. Some things it might try could lead to the dishes not getting washed very well - for instance, if all the plates are directly on top of each other. Other things that it might try could lead to dishes actually breaking - say if it tries to put plates into the dishwasher by dropping them from several feet above their desired location. The latter situation is in some sense worse: breaking dishes is monetarily costly, and the dish fragments could harm nearby people or even the robot.

Learning by trying out different possible actions and observing how successful they are is a type of learning known as reinforcement learning. A subfield of reinforcement learning focuses on safe reinforcement learning: ensuring that while the agent is learning, it doesn’t take actions that can have catastrophic consequences. Doing this is hard - in some sense, it requires avoiding things that are dangerous without necessarily knowing which things are dangerous!

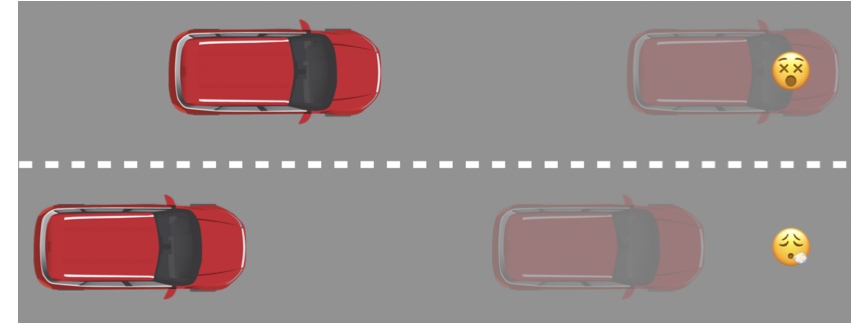

A number of different approaches have been developed. One, for instance, suggests that the agent should "imagine into the near future" so that it can consider what might happen and avoid it (Thomas, Luo, & Ma 2021). Below, an image from that paper shows that in the top scenario, it's too late for the top car to brake and avoid hitting the emoji pedestrian, but in the bottom image, the car still has enough time to act, if it can only recognize that it needs to do so by "imagining" what might happen in several seconds:
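To make the "imagining" idea concrete, here is a minimal Python sketch of the braking scenario. It is not the algorithm from Thomas, Luo, & Ma (2021). It is a toy illustration that assumes the car knows its own dynamics exactly (constant braking deceleration, fixed time step). The function names and all of the numbers are hypothetical.

```python
def will_collide(distance, speed, decel, dt=0.1, horizon=50):
    """Imagine braking as hard as possible and report whether the car
    still reaches the obstacle within the imagined horizon.

    distance: metres to the pedestrian, speed: m/s, decel: braking in m/s^2.
    All values are illustrative assumptions, not taken from the paper.
    """
    for _ in range(horizon):
        if speed <= 0:
            return False              # the car stopped short of the obstacle
        distance -= speed * dt        # roll the simple model forward one step
        if distance <= 0:
            return True               # the imagined trajectory hits the obstacle
        speed = max(0.0, speed - decel * dt)
    return False                      # no collision within the imagined horizon


def choose_action(distance, speed, decel=8.0, dt=0.1):
    """Keep driving only if braking one step later would still be safe."""
    next_distance = distance - speed * dt   # imagine driving on for one step
    if next_distance <= 0 or will_collide(next_distance, speed, decel, dt):
        return "brake"
    return "drive"


if __name__ == "__main__":
    print(choose_action(distance=50.0, speed=10.0))  # far away
    print(choose_action(distance=5.0, speed=10.0))   # very close
```

The key design choice is that the agent acts on the predicted future rather than the present: it brakes at the last moment from which braking can still succeed, instead of waiting until the danger is immediate.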

Safe reinforcement learning is valuable in a whole range of contexts, from adjusting HVAC control to make greener buildings to deploying robots in inhospitable terrain like the deep sea.

The project

In this project, you’ll be learning about reinforcement learning in general as well as safe reinforcement learning. Your goal will be to use these algorithms in a simulator to better understand how they work and examine the consequences of different approaches for ensuring safety. You’ll also think about where these methods might and might not be appropriate for real-world deployment outside of a simulator.

The progression of the project will look something like the following, where different parts of this progression might be split among different team members:

- Learn about simulators like the OpenAI Gym for exploring and testing reinforcement learning algorithms, and get an agent set up to interact in that environment.

- Learn about several safe reinforcement learning algorithms, and identify which standard (non-safe) reinforcement learning algorithm each of them builds on.

- Create an agent that interacts with the simulator using the non-safe version of the reinforcement learning algorithm, and one that uses the safe version of the reinforcement learning algorithm.

- Examine the literature to identify real-life applications of safe reinforcement learning.
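As a rough sketch of what comparing a non-safe and a safe agent could look like, the Python example below trains tabular Q-learning twice on a tiny hand-written corridor environment. Everything here is an assumption made for illustration: the environment is a stand-in for a real simulator such as the OpenAI Gym, and the "safe" variant simply masks actions that a hypothetical oracle flags as hazardous. That is only one simple approach, and in practice the agent usually does not know in advance which actions are dangerous.

```python
import random

GOAL, HAZARD, START = 5, 0, 1   # hypothetical corridor: hazard at the left end, goal at the right
ACTIONS = (-1, +1)              # step left or step right


def step(state, action):
    """Toy dynamics: returns (next_state, reward, done, violated)."""
    nxt = state + action
    if nxt == HAZARD:
        return nxt, -10.0, True, True    # catastrophic outcome ends the episode
    if nxt == GOAL:
        return nxt, 10.0, True, False
    return nxt, -1.0, False, False       # small cost for every ordinary step


def allowed_actions(state, safe):
    """The safe agent masks out actions that would enter the hazard."""
    if not safe:
        return ACTIONS
    return tuple(a for a in ACTIONS if state + a != HAZARD)


def train(safe, episodes=200, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Epsilon-greedy Q-learning; returns the Q-table and the violation count."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    violations = 0
    for _ in range(episodes):
        state = START
        for _ in range(50):                              # cap the episode length
            choices = allowed_actions(state, safe)
            if rng.random() < epsilon:
                action = rng.choice(choices)             # explore
            else:
                action = max(choices, key=lambda a: q[(state, a)])  # exploit
            nxt, reward, done, violated = step(state, action)
            violations += violated
            # Q-learning update, bootstrapping only from actions the agent may take
            best_next = 0.0 if done else max(
                q[(nxt, a)] for a in allowed_actions(nxt, safe))
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
            if done:
                break
    return q, violations


if __name__ == "__main__":
    for safe in (False, True):
        _, violations = train(safe=safe)
        print(f"safe={safe}: {violations} violations during training")
```

Both agents use the same underlying learning rule. The only difference is which actions are available during exploration, which makes it easy to compare how many unsafe events each one triggers while learning.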

Depending on the group’s interests and skill sets, you might spend more time using existing libraries that implement some of these safe reinforcement learning methods and understanding the consequences of different approaches, or you might spend more time on implementing particular algorithms.

Deliverables

- A working demo of an agent using some safe reinforcement learning method to learn in a simulated environment.

- Your well-documented source code for your demo and any other safe reinforcement learning approaches you explore.

- A paper or webpage that describes the reinforcement learning algorithms you explored and how well they worked in the simulator, including an evaluation of how well they learned overall and whether they learned safely, along with a discussion of how your results and what you learned are relevant to potentially deploying safe reinforcement learning methods in the real world.
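One possible way to report "how well they learned overall" alongside "whether they learned safely" is to log each training episode and summarize both kinds of metric together. The Python sketch below is only a suggestion. The `EpisodeLog` record, the `summarize` function, and the choice of metrics are all hypothetical and are not a required format.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class EpisodeLog:
    total_reward: float   # sum of rewards collected in the episode
    violated: bool        # True if any unsafe event occurred in the episode


def summarize(logs, last_n=10):
    """Report learning performance and safety side by side."""
    if not logs:
        raise ValueError("need at least one episode")
    recent = logs[-last_n:]
    total_violations = sum(log.violated for log in logs)
    return {
        "mean_return_all": mean(log.total_reward for log in logs),
        "mean_return_recent": mean(log.total_reward for log in recent),
        "total_violations": total_violations,
        "violation_rate": total_violations / len(logs),
    }


if __name__ == "__main__":
    logs = [
        EpisodeLog(-10.0, True),
        EpisodeLog(2.0, False),
        EpisodeLog(6.0, False),
        EpisodeLog(6.0, False),
    ]
    print(summarize(logs, last_n=2))
```

Counting violations over the whole training run, rather than only at the end, matters because an agent that ends up with a safe policy may still have caused many unsafe events while it was learning.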

Recommended experience

Having taken CS321: Making Decisions with AI or CS320: Machine Learning may be helpful background, but neither is necessary. Willingness to engage with mathematics will also be helpful.

References/inspiration

I’ll provide additional references when we start the project, but here are some relevant papers:

- Brunke, L., Greeff, M., Hall, A. W., Yuan, Z., Zhou, S., Panerati, J., & Schoellig, A. P. (2022). Safe learning in robotics: From learning-based control to safe reinforcement learning. Annual Review of Control, Robotics, and Autonomous Systems, 5, 411-444.

- Eysenbach, B., Gu, S., Ibarz, J., & Levine, S. (2018). Leave no Trace: Learning to Reset for Safe and Autonomous Reinforcement Learning. In 6th International Conference on Learning Representations (ICLR 2018).

- García, J., & Fernández, F. (2015). A comprehensive survey on safe reinforcement learning. Journal of Machine Learning Research, 16(1), 1437-1480.

- Gu, S., Yang, L., Du, Y., Chen, G., Walter, F., Wang, J., ... & Knoll, A. (2022). A review of safe reinforcement learning: Methods, theory and applications. arXiv preprint arXiv:2205.10330.

- Moldovan, T. M., & Abbeel, P. (2012, June). Safe exploration in Markov decision processes. In Proceedings of the 29th International Conference on Machine Learning (pp. 1451-1458).

- Qiu, D., Dong, Z., Zhang, X., Wang, Y., & Strbac, G. (2022). Safe reinforcement learning for real-time automatic control in a smart energy-hub. Applied Energy, 309, 118403.

- Thomas, G., Luo, Y., & Ma, T. (2021). Safe reinforcement learning by imagining the near future. Advances in Neural Information Processing Systems, 34, 13859-13869.