Main Focus

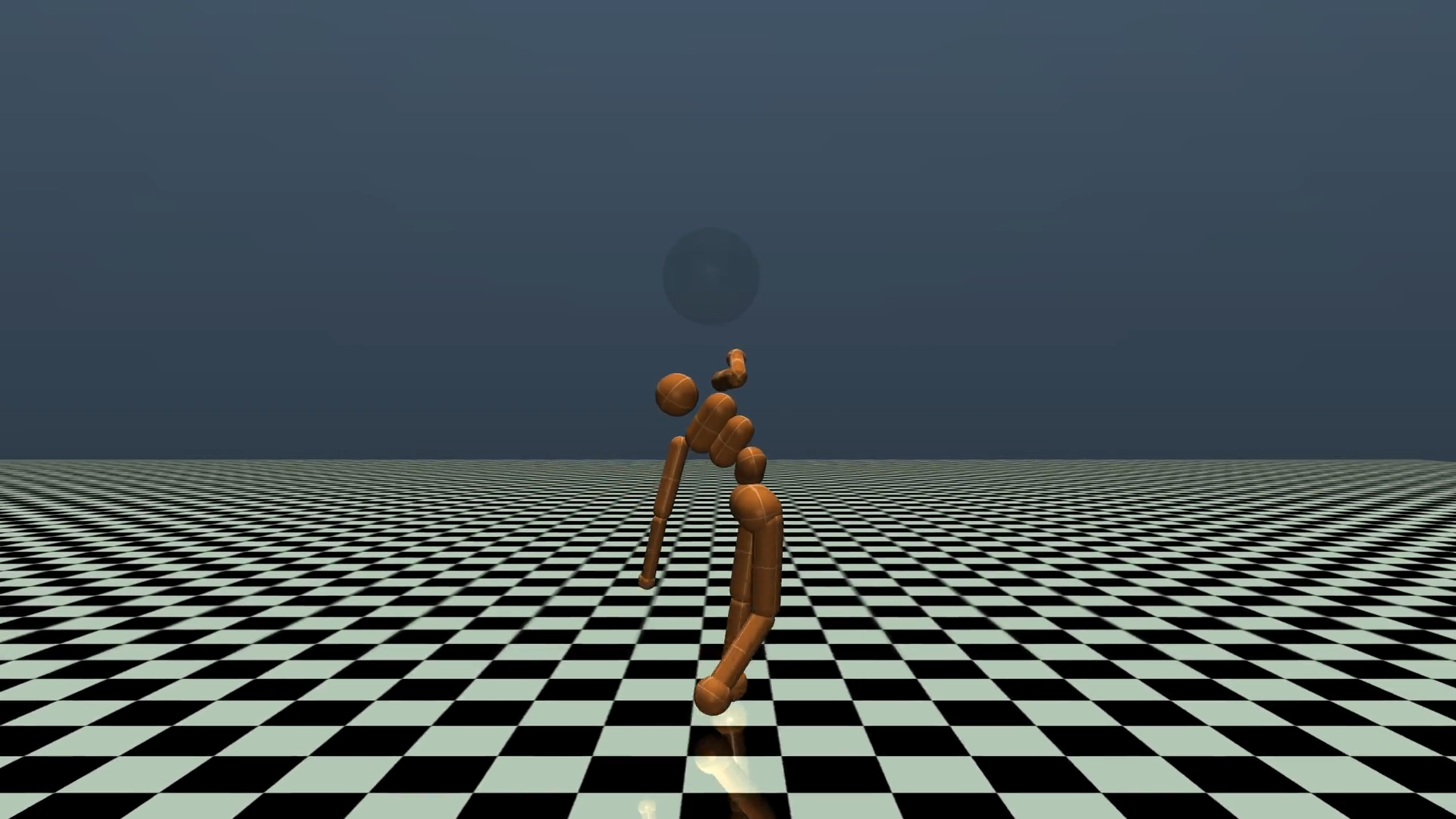

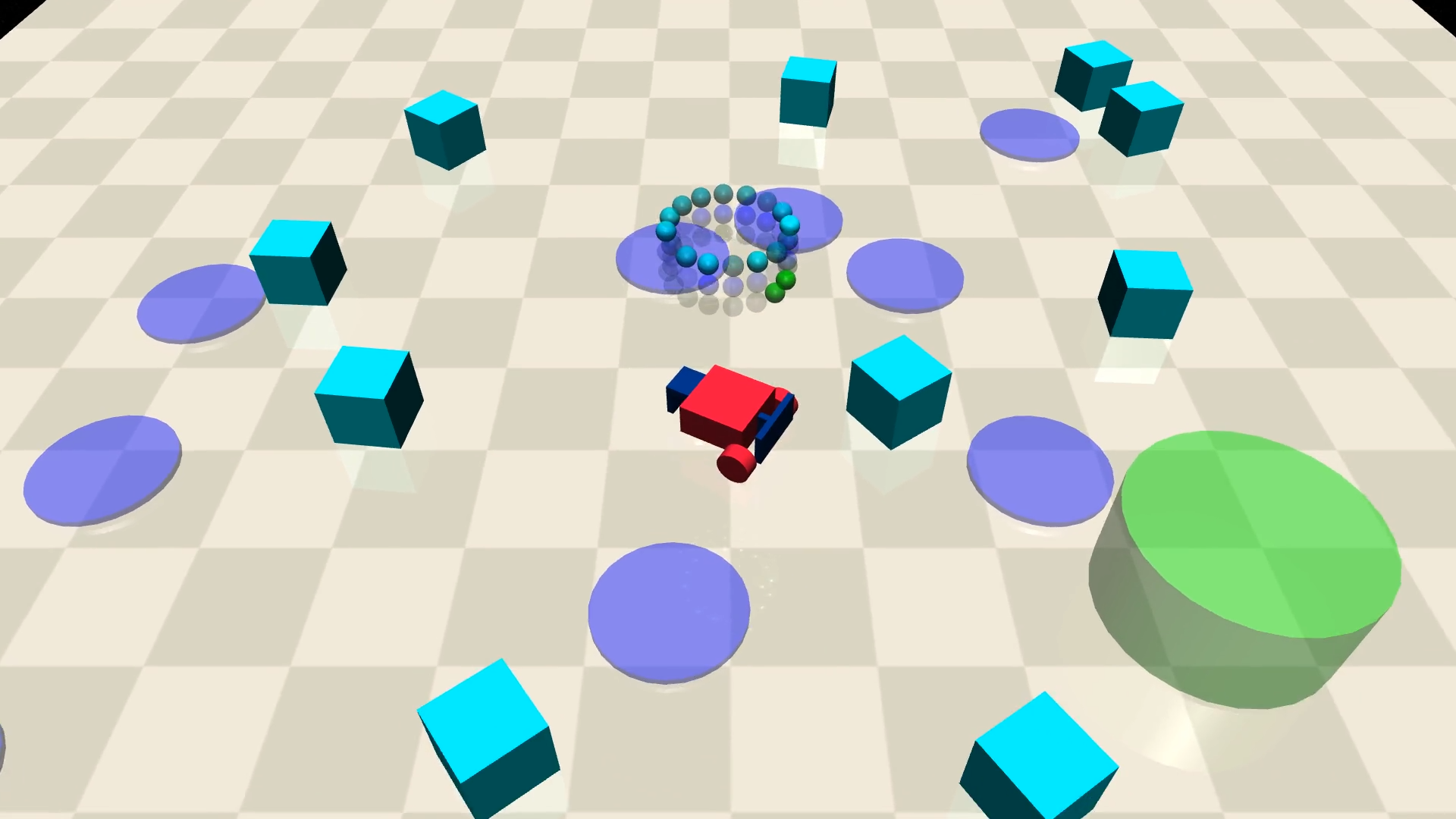

We wanted to investigate how altering the hyperparameters of typical safe reinforcement learning algorithms affects returns and costs across different agents and tasks. We ran all of our experiments with OmniSafe, an infrastructural framework for safe reinforcement learning. It provides pre-built algorithm implementations, safety adapters, environments from Safety Gym, and documentation on how to use the software. OmniSafe also integrates with Weights and Biases, a logging tool for ML research. We chose to run our tests on SafetyHumanoidVelocity-v1 and SafetyCarGoal2-v0 because they have different safety measures and different levels of complexity. This let us test different definitions of safety and compare algorithm performance across them.
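A minimal sketch of how such a hyperparameter sweep could be set up is shown here. It is an illustration under stated assumptions, not the exact configuration used in these experiments: the algorithm name `PPOLag`, the swept keys `cost_limit` and `lambda_lr`, and their values are placeholders, and the helpers `build_runs` and `train` are hypothetical names. The OmniSafe entry point assumed is `omnisafe.Agent(algo, env_id, custom_cfgs=...)` followed by `agent.learn()`.

```python
from itertools import product

# The two environments discussed in the text.
ENV_IDS = ["SafetyHumanoidVelocity-v1", "SafetyCarGoal2-v0"]

# Assumption: illustrative sweep values, not the ones used in the experiments.
SWEEP = {
    "cost_limit": [10.0, 25.0],
    "lambda_lr": [0.01, 0.035],
}


def build_runs(env_ids, sweep):
    """Return one (env_id, custom_cfgs) pair per environment and hyperparameter combination."""
    names = list(sweep)
    runs = []
    for env_id in env_ids:
        for values in product(*(sweep[name] for name in names)):
            custom_cfgs = {
                # Assumption: these keys live under `lagrange_cfgs`, as in
                # OmniSafe's Lagrangian-based algorithm configs.
                "lagrange_cfgs": dict(zip(names, values)),
                # Send metrics to Weights and Biases.
                "logger_cfgs": {"use_wandb": True},
            }
            runs.append((env_id, custom_cfgs))
    return runs


def train(algo, env_id, custom_cfgs):
    """Launch a single training run (requires OmniSafe to be installed)."""
    import omnisafe  # imported lazily so the grid can be inspected without it

    agent = omnisafe.Agent(algo, env_id, custom_cfgs=custom_cfgs)
    agent.learn()


RUNS = build_runs(ENV_IDS, SWEEP)

# Inspect the planned runs before launching anything.
for env_id, cfgs in RUNS:
    print(env_id, cfgs["lagrange_cfgs"])
```

To actually launch the sweep, each planned run would be passed to the trainer, for example `train("PPOLag", env_id, cfgs)` inside the loop. Building the grid separately from the training call makes it easy to check how many runs a sweep implies before committing compute to it.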