
Can you explain your answer?: Making sense of machine learning models

Advisor: Anna Rafferty

Times: Winter 4a

Background

Machine learning models are increasingly able to correctly identify objects in images, suggest what material a student should review to address gaps in their understanding, or label a movie review as a rant or a rave. But increasing accuracy has also tended to bring increasing complexity and decreasing interpretability. This lack of interpretability poses a significant problem when considering adopting these models for widespread use. If, for instance, a model suggests that a student should review concept A, but she thinks she already knows that concept, she may not trust the model’s recommendation unless she knows why that recommendation was made.

Being able to interpret a model may also make it easier to identify why a model is making incorrect predictions. For example, if a model is mainly identifying whether an image is of a dog or wolf based on pixels in the background of the image, rather than pixels that are in the dog or wolf, it suggests the model may not really have learned the difference.

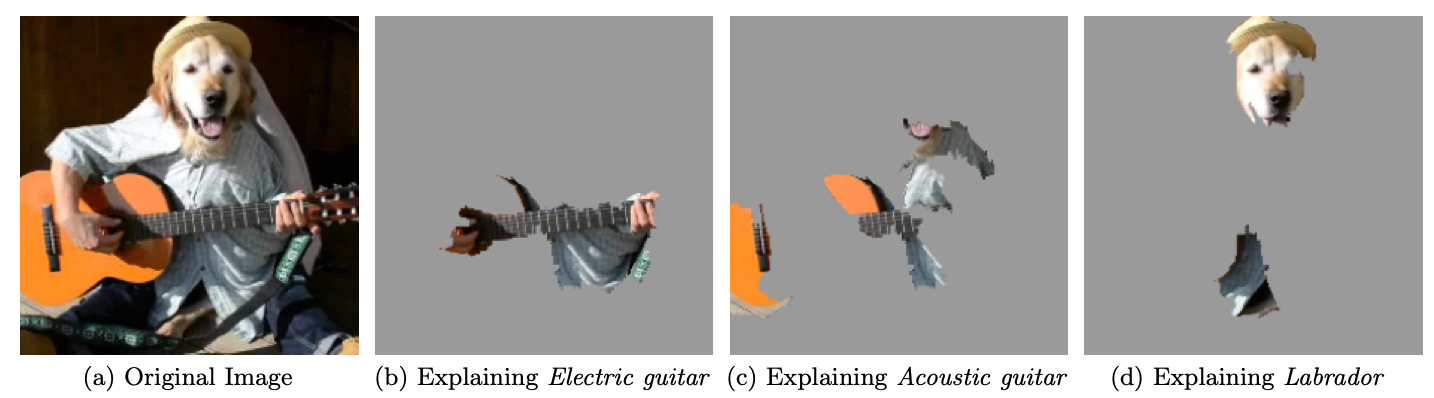

Explainable AI (XAI) approaches have mainly focused on two areas: (1) building models that are intrinsically explainable, and (2) developing algorithms that provide a post hoc explanation for a model’s output. One example of a method that tries to explain a model's output post hoc is LIME. The accompanying picture shows the full image on the left; the three panels to its right show which parts of the image LIME identified as leading to the model's outputs that the image might contain an electric guitar, an acoustic guitar, and a Labrador (image from Ribeiro, Singh, & Guestrin 2016).

Even though the electric guitar output is wrong, the fact that the strings of the guitar contribute to that output is reassuring in terms of the overall trustworthiness of the model.
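To give a feel for how a post hoc method like LIME can arrive at such an explanation, here is a minimal sketch of the general idea for tabular inputs: perturb the instance, query the model, weight the perturbed samples by how close they are to the original, and fit a weighted linear model whose coefficients serve as the explanation. This is a simplification under stated assumptions, not the LIME library or the exact procedure from the paper (which, for images, switches superpixels on and off rather than adding noise). The `black_box` model, the Gaussian perturbation scheme, and all parameter names and values are hypothetical choices made for illustration.

```python
import numpy as np


def black_box(X):
    """Hypothetical model to explain: relies strongly on feature 0,
    weakly (and negatively) on feature 1, and ignores feature 2."""
    return 1.0 / (1.0 + np.exp(-(3.0 * X[:, 0] - 0.5 * X[:, 1])))


def lime_style_explanation(predict, x, n_samples=2000, scale=0.5,
                           kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear surrogate around the instance x.

    Returns one coefficient per feature; larger magnitude means the
    feature matters more to the model's output near x.
    """
    rng = np.random.default_rng(seed)

    # 1. Sample perturbed copies of the instance (assumed Gaussian noise).
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.shape[0]))

    # 2. Ask the black-box model for its output on each perturbed sample.
    y = predict(Z)

    # 3. Weight samples by proximity to x with an exponential kernel.
    dist = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(dist ** 2) / (kernel_width ** 2))

    # 4. Weighted least squares: intercept plus one slope per feature.
    A = np.hstack([np.ones((n_samples, 1)), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # drop the intercept


x = np.array([0.2, -0.1, 0.7])
weights = lime_style_explanation(black_box, x)
for i, wt in enumerate(weights):
    print(f"feature {i}: {wt:+.3f}")
```

In this toy setup the surrogate should assign the largest weight to feature 0 and a weight near zero to feature 2, mirroring how the hypothetical model was built. A real explanation would be read the same way: features with large coefficients are the ones driving the model's output near that particular input.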

The project

In this project, you’ll be learning about and applying post hoc explainable machine learning methods. Specifically, you will:

- Learn about at least three post hoc explainable machine learning methods, including both their technical details and why they might be useful.

- Identify at least two domains or model(s) where you can apply these methods.

- Compare the output of the explainable learning methods across the domains/models, examining their similarities and differences.

- (Time permitting) Conduct a user study about one or more explainability methods using the approach in one of the papers you read.
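One way to make the comparison step above concrete is to treat each method's explanation as a vector of feature attributions and measure how much two such vectors agree. The sketch below shows two simple agreement measures: overlap of the top-k most important features, and rank correlation of feature importance. These measures, the function names, and the attribution values are all illustrative assumptions, not a prescribed part of the project; the papers you read may suggest better-suited comparisons.

```python
import numpy as np


def top_k_overlap(a, b, k):
    """Jaccard overlap between the k most important features
    (by absolute attribution) under two explanations."""
    top_a = set(np.argsort(-np.abs(a))[:k].tolist())
    top_b = set(np.argsort(-np.abs(b))[:k].tolist())
    return len(top_a & top_b) / len(top_a | top_b)


def rank_correlation(a, b):
    """Spearman-style correlation between importance rankings.
    Assumes no ties among the absolute attribution values."""
    ranks_a = np.argsort(np.argsort(np.abs(a)))
    ranks_b = np.argsort(np.argsort(np.abs(b)))
    return float(np.corrcoef(ranks_a, ranks_b)[0, 1])


# Made-up attributions for the same input from two hypothetical methods.
method_a = np.array([0.60, -0.10, 0.02, 0.30])
method_b = np.array([0.45, 0.05, -0.20, 0.25])

overlap = top_k_overlap(method_a, method_b, k=2)
rho = rank_correlation(method_a, method_b)
print(f"top-2 overlap: {overlap:.2f}, rank correlation: {rho:.2f}")
```

Here both methods agree on the two most important features even though they rank the less important ones differently, which is the kind of similarity and difference the comparison is meant to surface.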

Deliverables

- Your well-documented source code for using the explainable AI approaches and comparing their results.

- A paper or webpage that describes the explainable AI methods, your process for applying them to a particular domain or model, your hypotheses about what you would find, your results, and a discussion of the implications of this work for using post hoc explainable AI methods in different contexts.

Recommended experience

It would be helpful, but is not required, for at least one member of the group to have taken a machine learning or artificial intelligence course. XAI approaches tend to require multidisciplinary thinking, so having a group with a mix of experiences, perhaps including some psychology, human-computer interaction, and linear algebra, is also likely to be helpful. Willingness to think deeply about what an "explanation" is and about the consequences of deploying black-box AI and machine learning models in the real world is crucial.

References/inspiration

I’ll provide additional references when we start the project, but here are some relevant papers:

- Dieber, J., & Kirrane, S. (2020). Why model why? Assessing the strengths and limitations of LIME. arXiv preprint arXiv:2012.00093.

- Hase, P., & Bansal, M. (2020). Evaluating Explainable AI: Which Algorithmic Explanations Help Users Predict Model Behavior? In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (pp. 5540–5552).

- Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. Advances in neural information processing systems, 30.

- Mohseni, S., Zarei, N., & Ragan, E. D. (2021). A multidisciplinary survey and framework for design and evaluation of explainable AI systems. ACM Transactions on Interactive Intelligent Systems (TiiS), 11(3-4), 1-45.

- Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August). "Why should I trust you?" Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1135-1144).

- Speith, T. (2022, June). A review of taxonomies of explainable artificial intelligence (XAI) methods. In 2022 ACM Conference on Fairness, Accountability, and Transparency (pp. 2239-2250).