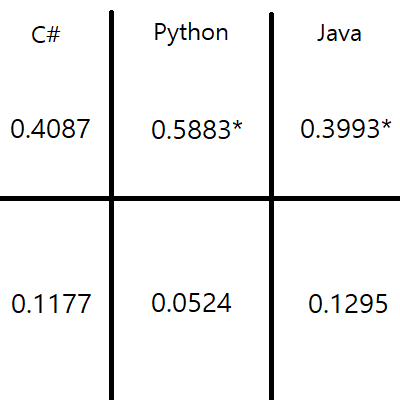

Results

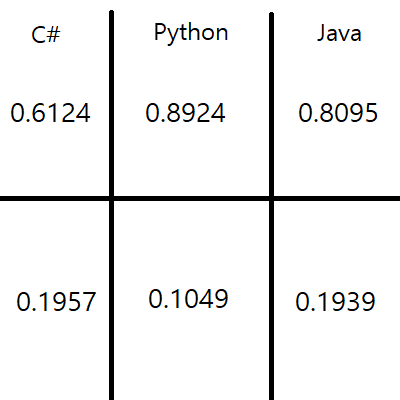

We extensively tested the BVAE and its baselines on C#, Java, and Python code datasets.

We were quite satisfied with the results. On the summarization task, they even exceeded those reported in the source paper. These results are detailed below.