About the Project

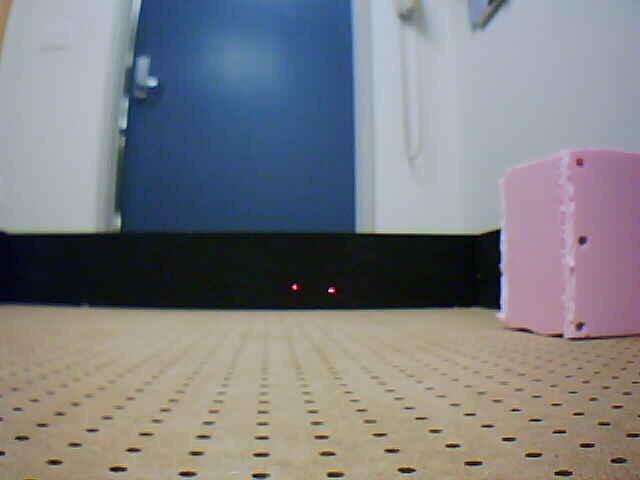

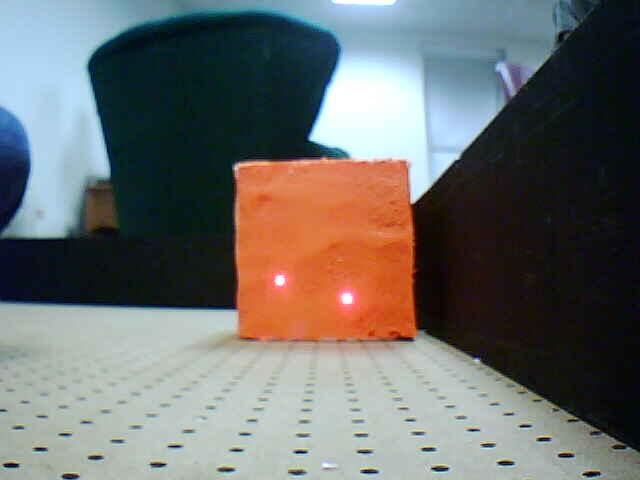

Armed with the mighty Surveyor SRV-1 robot, we began by defining some specifications for our project. The robot has an onboard camera and two laser pointers, and it can drive forward and backward and turn left and right. We simplified the project by defining our environment to consist of black walls, orange hazards, and pink victims set on an 8'x4' grid. We divided the grid into 8"x8" squares, a choice justified by the robot's small size and by the fact that both dimensions (96" and 48") divide evenly by 8.

General Strategy

We decided to pursue a search algorithm that would be guaranteed to discover every object on the map. Our strategy, then, became to find a wall and trace our way around it (moving one grid space at a time) until we had returned to our original starting position. From there, we divided the interior and explored it as well.

Initially, we had hoped to load all of the code directly onto the robot. Unfortunately, firmware difficulties prevented this, so we decided to control the SRV-1 remotely from a computer.

Algorithms

Distance - Our algorithm for distance calculation:

- Turn on lasers

- Take picture

- Locate the lasers in the picture. We did this by dividing the picture in two and exploring outward from the middle while looking for "blobs" of red and white, the colors that the lasers gave off.

- Find the horizontal distance in pixels between the two lasers.

- This pixel distance is inversely proportional to the actual distance from the robot to the wall. We determined the parameters of this relationship experimentally and passed the pixel distance to a function that returned the physical distance.
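The last step above can be sketched as a one-parameter fit. This is a minimal illustration, assuming the simplest inverse model, distance = k / pixel gap; the function names and the calibration numbers are invented for the example and are not our measured values.

```python
def fit_inverse_model(samples):
    """Least-squares fit of distance = k / pixel_gap to calibration samples."""
    # With x = 1 / pixel_gap the model is linear through the origin:
    # distance = k * x, so k = sum(x * d) / sum(x * x).
    xs = [1.0 / gap for gap, _ in samples]
    ds = [dist for _, dist in samples]
    return sum(x * d for x, d in zip(xs, ds)) / sum(x * x for x in xs)


def distance_from_gap(pixel_gap, k):
    """Convert the horizontal pixel gap between the laser dots to a distance."""
    if pixel_gap <= 0:
        raise ValueError("laser dots not found or not separated")
    return k / pixel_gap


# Hypothetical calibration data: (pixel gap between dots, measured inches).
CALIBRATION = [(120, 4.0), (60, 8.0), (30, 16.0), (20, 24.0)]
K = fit_inverse_model(CALIBRATION)

# A gap of 40 pixels maps to k / 40 inches under this model.
estimated_inches = distance_from_gap(40, K)
```

A real calibration would likely need more samples, and possibly an offset term, if the lasers are not perfectly parallel to the camera axis.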

Recalibration - Because our robot's movement was imprecise, it became necessary to recalibrate periodically to guarantee that we remained perpendicular to the walls.

We did this using a horizon-detection algorithm which we devised. This may be outlined as follows:

- Take picture

- Find several (ten in our case) points along the border between the floor of the grid and the walls. We did this using our assumption that the walls would always be very dark and the floor would be relatively light. We used a color differentiation algorithm that looked for long, vertical stripes of dark pixels, whose lower ends would indicate this border.

- Calculate the (x,y) coordinates of these points, then fit a line to them by regression.

- If the standard deviation of the points about the fitted line was too great, try assuming that we were at a corner, and look for two lines.

- If this still did not yield statistically significant results, return to step 1 and hope for a better picture.

- If it did find one or two good lines, check to see whether the absolute value of the slope was close to zero. If so, then we may assume we are properly aligned.

- If not, rotate an amount correlated with the slope of the line in order to straighten. Repeat entire algorithm until slope is close to zero.
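The decision logic of the steps above can be sketched as follows. This is an illustrative version only: the thresholds are made up, the conversion from slope to rotation angle via the arctangent is an assumption (the true mapping depends on the camera geometry), and the two-line corner fallback is left out for brevity.

```python
import math

MAX_RESIDUAL_STD = 3.0   # pixels; hypothetical "too noisy" threshold
SLOPE_TOLERANCE = 0.02   # hypothetical "close to zero" threshold


def fit_line(points):
    """Ordinary least-squares fit y = slope * x + intercept.

    Returns (slope, intercept, residual_std). Assumes the points do not
    all share the same x coordinate.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residuals = [y - (slope * x + intercept) for x, y in points]
    residual_std = math.sqrt(sum(r * r for r in residuals) / n)
    return slope, intercept, residual_std


def alignment_step(points):
    """Decide what to do with one set of floor/wall border points."""
    slope, _, residual_std = fit_line(points)
    if residual_std > MAX_RESIDUAL_STD:
        return ("retry", 0.0)      # poor fit: try the corner case or retake
    if abs(slope) <= SLOPE_TOLERANCE:
        return ("aligned", 0.0)    # horizon is level: we face the wall squarely
    # Assumed correction: rotate by the angle the border makes with the x axis.
    return ("rotate", math.degrees(math.atan(slope)))


level = [(x * 30, 100) for x in range(10)]
tilted = [(x * 30, 50 + 3 * x) for x in range(10)]   # slope of 0.1
```

Calling `alignment_step` in a loop, taking a fresh picture after each rotation, reproduces the repeat-until-level behaviour described in the list.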

Movement - Our movement algorithm can be visualized as a blind man walking with his right hand on the wall. At each grid point, we would begin by turning to the right and checking whether we were facing a wall (that is, whether our distance calculation returned a distance of less than 8 inches). If so, we would turn left again and move forward as long as the space in front of us was clear. We repeated this until the environment was fully explored.
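The right-hand rule can be simulated on a small grid. The sketch below is a generic wall follower rather than our control code: the grid encoding, the function name, and the stopping condition (returning to the start square) are assumptions, and the map must be fully enclosed by walls. The robot should start with a wall on its right.

```python
# Headings in clockwise order: north, east, south, west, as (row, col) steps.
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def trace_perimeter(grid, start, heading, max_steps=1000):
    """Follow the wall on the robot's right until it returns to `start`.

    `grid` is a list of strings in which '#' marks a blocked square.
    Returns the list of (row, col) squares visited, including the start.
    """
    row, col = start
    path = [start]
    for _ in range(max_steps):
        # Prefer turning right, then going straight, then left, then back.
        for turn in (1, 0, -1, 2):
            new_heading = (heading + turn) % 4
            d_row, d_col = HEADINGS[new_heading]
            if grid[row + d_row][col + d_col] != "#":
                heading = new_heading
                row, col = row + d_row, col + d_col
                path.append((row, col))
                break
        else:
            break  # walled in on all four sides
        if (row, col) == start:
            break
    return path


ROOM = [
    "#####",
    "#...#",
    "#...#",
    "#####",
]
# Start in the top-left open square facing west (index 3), wall on the right.
loop = trace_perimeter(ROOM, (1, 1), heading=3)
```

In this small room the trace visits every open square once before returning to the start; in a larger room it would only cover the squares along the walls, which is why the interior was explored separately afterwards.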

Object Recognition - In order to identify the victims, hazards, and walls in the environment, we needed to be able to determine what we were looking at. We color-coded our objects to make this task more manageable. When we were facing an object, we took a picture, sampled a 3x3 grid of pixels, and averaged the RGB values of the nine pixels. We then compared these averages to an experimentally determined mapping for the objects in the world.
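A minimal sketch of this classification step follows. The reference colors are placeholders rather than our measured values, and picking the nearest reference color by squared RGB distance is one plausible way to do the comparison, assumed here for illustration.

```python
# Hypothetical reference colors; real values would be measured from the robot's camera.
REFERENCE_COLORS = {
    "wall": (20, 20, 20),        # black
    "hazard": (230, 120, 30),    # orange
    "victim": (240, 100, 170),   # pink
}


def average_patch(image, row, col):
    """Mean (R, G, B) of the 3x3 pixel patch centred on (row, col).

    `image` is a list of rows, each a list of (R, G, B) tuples.
    """
    pixels = [image[r][c]
              for r in range(row - 1, row + 2)
              for c in range(col - 1, col + 2)]
    return tuple(sum(p[i] for p in pixels) / 9 for i in range(3))


def classify(rgb):
    """Return the label whose reference color is closest to `rgb`."""
    def squared_distance(label):
        ref = REFERENCE_COLORS[label]
        return sum((a - b) ** 2 for a, b in zip(rgb, ref))
    return min(REFERENCE_COLORS, key=squared_distance)


# A tiny synthetic 3x3 "image" of a roughly orange surface.
orange_image = [[(235, 115, 35)] * 3 for _ in range(3)]
label = classify(average_patch(orange_image, 1, 1))
```

Averaging over nine pixels smooths out single-pixel noise before the comparison; a production version would probably also reject colors that are far from every reference.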

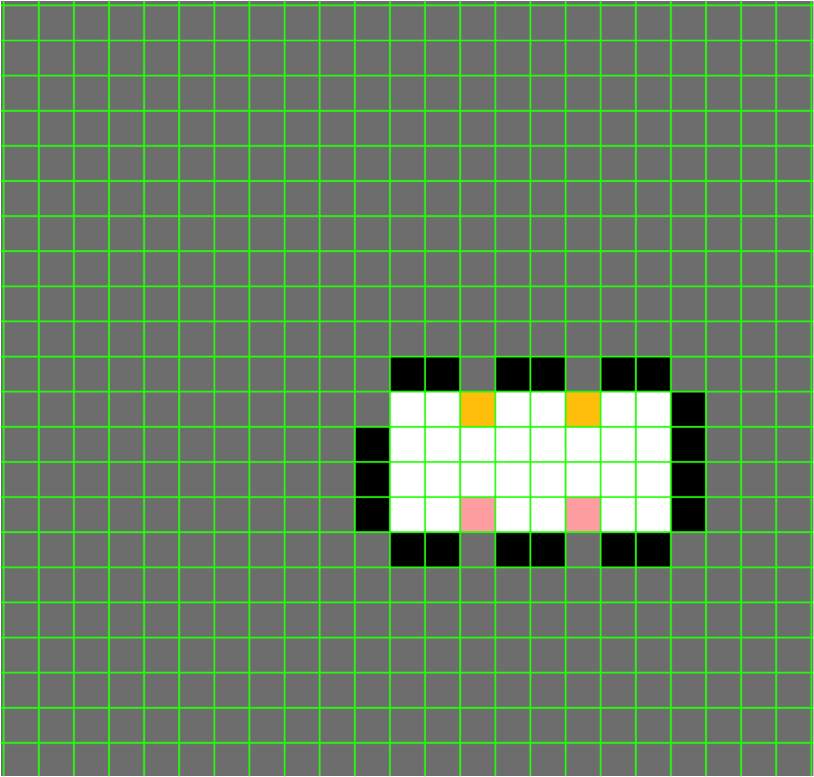

Mapping - Every time a movement command was issued, it was relayed to our real-time mapping software, which updated the map to reflect the new information gained from that command. For instance, a move forward would guarantee that the grid space in front of the previous location was marked as open. Alternatively, detecting a wall, victim, or hazard in front of the robot would imply that this space was blocked. In all cases, the map shown on the computer reflected the most up-to-date knowledge of the environment, giving potential rescuers a good idea of the situation before they entered.
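The update rules can be sketched with a small grid class. The class, its method names, and the cell symbols are hypothetical; only the 12x6 grid size follows from the 8'x4' environment and 8" squares.

```python
UNKNOWN, OPEN = "?", "."
COLS, ROWS = 12, 6   # 96" / 8" by 48" / 8"
STEPS = {"N": (-1, 0), "E": (0, 1), "S": (1, 0), "W": (0, -1)}


class GridMap:
    """Tracks the robot's pose and what is known about each grid square."""

    def __init__(self, row, col, heading):
        self.cells = [[UNKNOWN] * COLS for _ in range(ROWS)]
        self.row, self.col, self.heading = row, col, heading
        self.cells[row][col] = OPEN   # the robot is standing here

    def _ahead(self):
        d_row, d_col = STEPS[self.heading]
        return self.row + d_row, self.col + d_col

    def record_turn(self, direction):
        """Update the heading after a 'left' or 'right' quarter turn."""
        order = "NESW"
        step = 1 if direction == "right" else -1
        self.heading = order[(order.index(self.heading) + step) % 4]

    def record_forward(self):
        """A successful forward move proves the square ahead is open."""
        self.row, self.col = self._ahead()
        self.cells[self.row][self.col] = OPEN

    def record_detection(self, symbol):
        """Mark the square ahead as blocked by a wall, hazard, or victim."""
        row, col = self._ahead()
        if 0 <= row < ROWS and 0 <= col < COLS:
            self.cells[row][col] = symbol


grid_map = GridMap(0, 0, "E")
grid_map.record_forward()        # square (0, 1) is now known to be open
grid_map.record_detection("V")   # a victim blocks square (0, 2)
grid_map.record_turn("right")    # now facing south
```

Printing `"".join(row)` for each row of `grid_map.cells` gives a simple text rendering of the current map.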
