Project Overview

Sensors

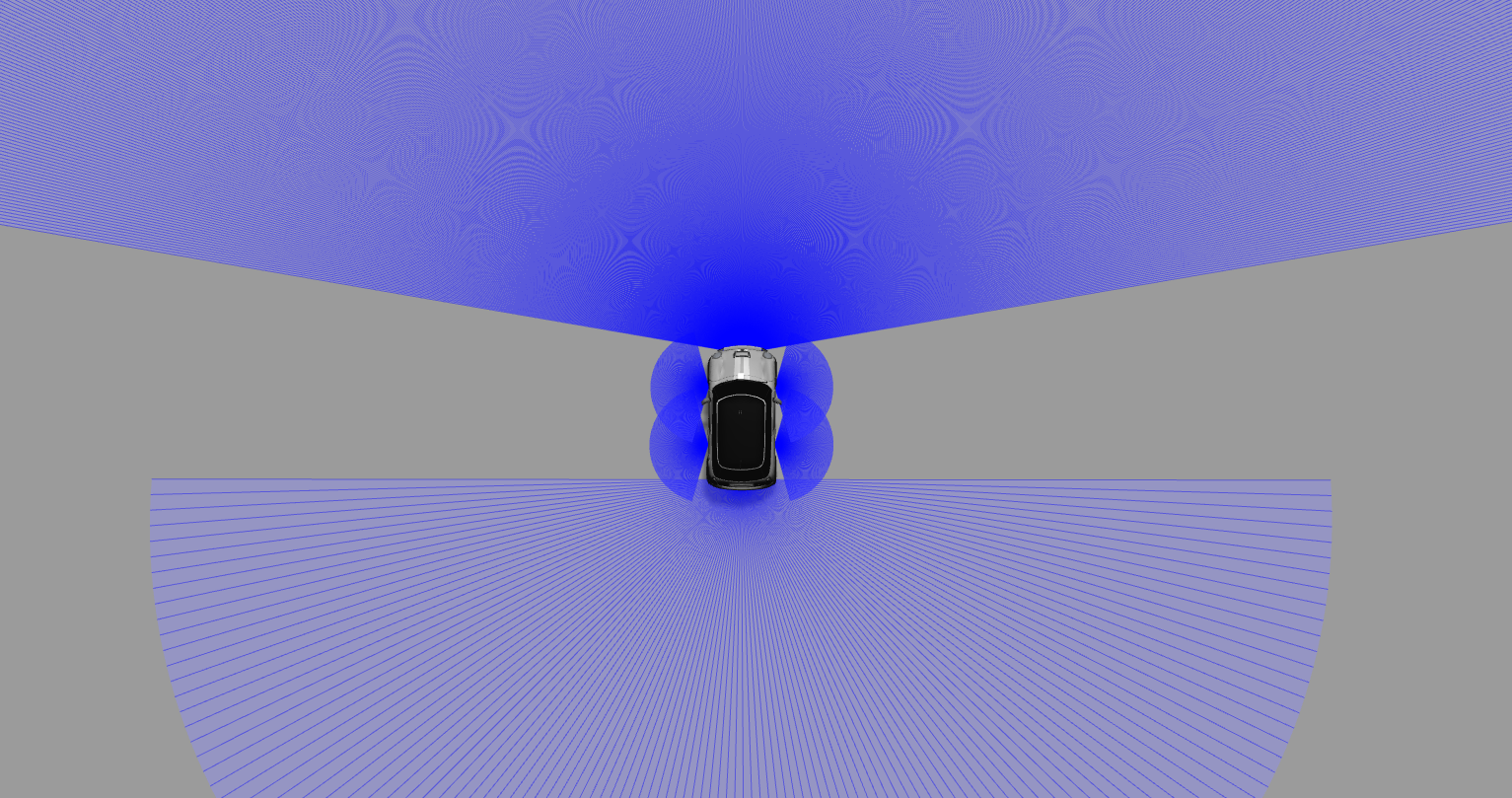

All good self-driving cars need sensors, and ours has quite a few. We used LIDAR (light detection and ranging), GPS, and a front-mounted camera to give our algorithms all the data they can handle. Pictured above is a visualization of the LIDAR sensor our car uses.

Finite State Machine

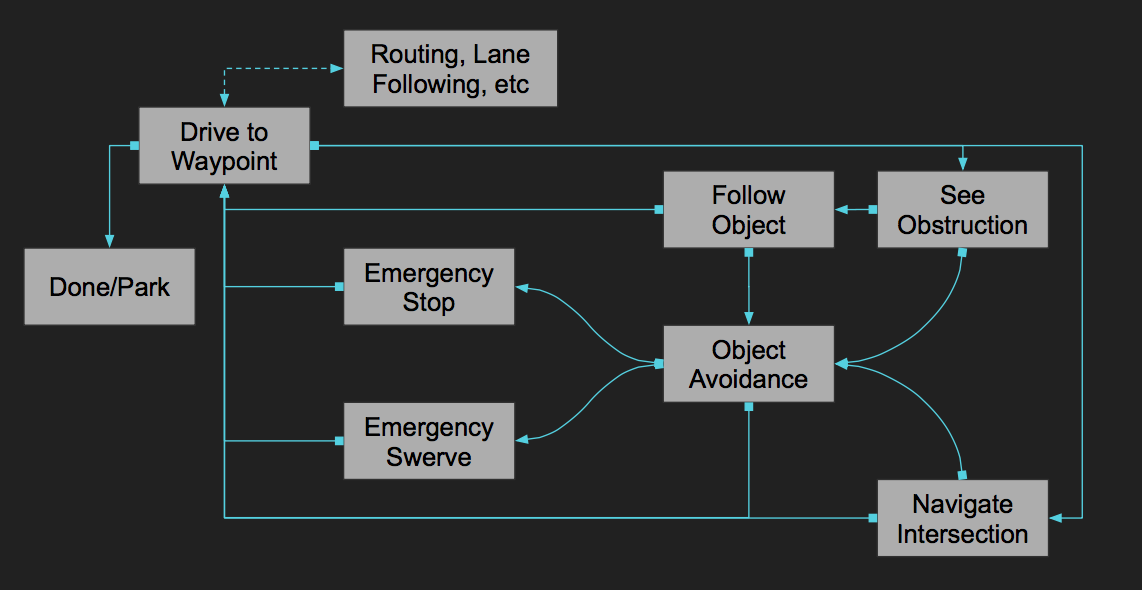

The car's logic was based on a finite state machine whose transitions are driven by the information gathered from the various sensors. The states included waypoint driving (point A to point B to point C, etc.), follow (trailing cars in front), avoidance (navigating around obstacles), intersection (moving through intersections), and emergency stop/swerve. Combining these states with routing, lane detection, and the other components gave a functional car in a sparse urban environment.
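As a rough illustration of how such a state machine can be organized, here is a minimal Python sketch. The state names follow the list above, but the `Event` names, the transition table, and the `step` function are assumptions made for this example, not the car's actual transition logic.

```python
from enum import Enum, auto


class State(Enum):
    WAYPOINT = auto()
    FOLLOW = auto()
    AVOIDANCE = auto()
    INTERSECTION = auto()
    EMERGENCY = auto()


class Event(Enum):
    # Hypothetical events distilled from sensor data on each update.
    CLEAR = auto()
    LEAD_VEHICLE = auto()
    OBSTACLE = auto()
    INTERSECTION_AHEAD = auto()
    HAZARD = auto()


# (current state, event) -> next state. Pairs not listed keep the current state.
TRANSITIONS = {
    (State.WAYPOINT, Event.LEAD_VEHICLE): State.FOLLOW,
    (State.WAYPOINT, Event.OBSTACLE): State.AVOIDANCE,
    (State.WAYPOINT, Event.INTERSECTION_AHEAD): State.INTERSECTION,
    (State.FOLLOW, Event.CLEAR): State.WAYPOINT,
    (State.FOLLOW, Event.OBSTACLE): State.AVOIDANCE,
    (State.FOLLOW, Event.INTERSECTION_AHEAD): State.INTERSECTION,
    (State.AVOIDANCE, Event.CLEAR): State.WAYPOINT,
    (State.INTERSECTION, Event.CLEAR): State.WAYPOINT,
    (State.EMERGENCY, Event.CLEAR): State.WAYPOINT,
}


def step(state: State, event: Event) -> State:
    """Return the next state; a hazard overrides every other transition."""
    if event is Event.HAZARD:
        return State.EMERGENCY
    return TRANSITIONS.get((state, event), state)
```

For example, `step(State.WAYPOINT, Event.LEAD_VEHICLE)` moves to `State.FOLLOW`, while a `HAZARD` event forces `State.EMERGENCY` from any state. Keeping the transitions in a table makes it easy to see at a glance which moves are allowed.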

Data collected from the LIDAR sensors can also identify other objects in the road ahead of the car, which are tracked along with their estimated speed and direction. Information about these objects is used to identify potential hazards and to inform the transitions between states.
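One simple way to estimate a tracked object's speed and direction is to difference its position across two successive scans. The sketch below assumes positions are (x, y) coordinates in metres in a fixed ground frame; the function name `estimate_motion` and its parameters are hypothetical, and a real tracker would typically smooth these estimates over several scans.

```python
import math


def estimate_motion(p0, p1, dt):
    """Estimate (speed in m/s, heading in degrees) from two (x, y) positions.

    p0 and p1 are the object's positions in metres at two successive scans,
    and dt is the time between the scans in seconds. Heading is measured
    counter-clockwise from the +x axis and normalized to [0, 360).
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    dx = p1[0] - p0[0]
    dy = p1[1] - p0[1]
    speed = math.hypot(dx, dy) / dt
    heading = math.degrees(math.atan2(dy, dx)) % 360.0
    return speed, heading
```

An object that moves from (0, 0) to (3, 4) in half a second has travelled 5 m, so its estimated speed is 10 m/s.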

Lane and Object Detection

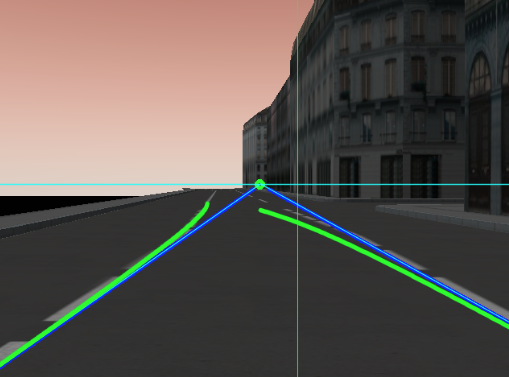

We developed a custom algorithm for lane detection that was based on the work of Park et al. (2001).

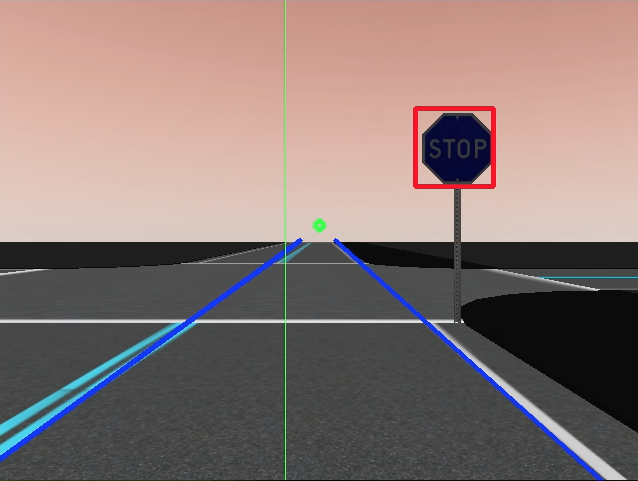

We also employed a Haar Cascade Classifier to detect stop signs. We would like to thank Andrew Turksanski for the trained stop sign cascade, which was developed for the University of Utah's submission to the DARPA Urban Grand Challenge.

Self-Parking Car

We implemented two self-parking maneuvers: reverse perpendicular parking and parallel parking. Both rely on the LIDAR sensors around the car.

Routing

We implemented Dijkstra's algorithm on a graph with intersections as vertices to generate the shortest path to our destination, which the car then follows.
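For reference, here is a minimal Python sketch of Dijkstra's algorithm on such a graph. It assumes the graph is a dictionary mapping each intersection to its neighbors and the road length between them; that representation, the `shortest_path` name, and the example map are illustrative choices, not the project's actual code.

```python
import heapq
import math


def shortest_path(graph, start, goal):
    """Return (total distance, list of vertices) for the shortest path.

    graph maps each vertex to a dict of {neighbor: non-negative edge weight}.
    If goal is unreachable, returns (math.inf, []).
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist.get(node, math.inf):
            continue  # stale queue entry; a shorter route was already found
        for neighbor, weight in graph.get(node, {}).items():
            candidate = d + weight
            if candidate < dist.get(neighbor, math.inf):
                dist[neighbor] = candidate
                prev[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if goal not in dist:
        return math.inf, []
    # Walk the predecessor links back from the goal to rebuild the route.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return dist[goal], path


# Hypothetical map: intersections A-D with road lengths as edge weights.
roads = {
    "A": {"B": 4, "C": 1},
    "B": {"D": 3},
    "C": {"B": 2, "D": 7},
    "D": {},
}
distance, route = shortest_path(roads, "A", "D")
```

On this example map the route is A, C, B, D with a total length of 6, which beats the more direct A, B, D route of length 7.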